Realistic expectations for your gigabit network

Noah Davids

Stratus Support Engineer

Stratus Technologies

The new Stratus® ftServer® V Series platform comes only with gigabit interfaces. True, they run fine at 10 or 100 Mb/sec, but at those speeds the system can saturate the network. Why not run them at 1000 Mb/sec? There is no reason you shouldn't, and the increased throughput is a good reason you should. However, if you expect all your transactions to race across the network at gigabit speeds, you will be disappointed. This article explains why you will not get gigabit speeds even though you have a gigabit network.

There are three different speeds to consider:

- The speed at which an individual frame is transferred from the card to the network, or from the network to the card

- The speed at which a stream of frames can be transferred from the operating system to the network, or from the network to the operating system

- The speed at which a TCP conversation can be conducted

An individual frame can indeed be transferred from the card to the network or the network to the card at gigabit speeds. If that were not the case, the card could not operate on a gigabit network. However, there are few environments where sending a single frame is useful.

The second speed applies only to protocols such as UDP or ICMP, which have no inherent windowing or flow control. On the Stratus V Series system, there are two different limits: one on the receiving side and one on the transmitting side.

The transmitting side has a limit of 350 Mb/sec, which is set by the bus speed. On the receiving side, the limit is 650 Mb/sec in VOS 15.0 and 750 Mb/sec in VOS 15.1; this limit is set by the speed at which data can be pushed up through the IP layer. Adding more sockets, processes, or even interfaces does not improve on these figures, because they apply to the total output and input of the system. Note also that these numbers represent bits transmitted, including protocol headers, not application data.

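To determine application throughput, you must take into account the Ethernet frame size and the amount of application data in each Ethernet frame. Table 1 shows the calculations for 1 byte and for 1472 bytes (the maximum application data in a UDP packet that fits in a single Ethernet frame). The formula is:

$$\text{application data rate} = \text{raw bit rate} \times \frac{\text{application bytes per frame}}{\text{Ethernet frame bytes}}$$

For 1472 bytes of UDP data, the Ethernet frame is 1518 bytes (1472 data + 8 UDP header + 20 IP header + 18 Ethernet header and checksum); a 1-byte payload is padded out to the 64-byte minimum Ethernet frame.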

The TCP conversation rate is the final speed to consider. In a TCP conversation, you must take into account not only the raw throughput of the system but also TCP windowing. The maximum throughput of a TCP conversation, based on the TCP window size, is:

$$\text{maximum throughput} = \frac{\text{TCP window size}}{\text{round trip time}}$$

The maximum transmit TCP window that Streams TCP (STCP) will use is 32K bytes (64K in VOS 15.1); the window actually used also depends on the other host's advertised receive window. STCP uses a receive window of 64K, 32K, 16K, or 8K bytes. The one it selects depends on several factors (see Vol 5 of this newsletter for a discussion of window size), but for this discussion I will assume 32K bytes. The round trip time is more difficult to gauge.

Let's assume a round trip time of 0.2 ms, which I measured on my network. Note, however, that this was an average; individual times reached 0.62 ms. Using 0.2 ms gives a maximum throughput of 163.84 MB (bytes)/sec, or 1.31 Gb (bits)/sec. The system cannot actually reach that rate, so what the result tells us is that with a round trip time of 0.2 ms, a 32K window will not be a bottleneck. Even an 8K window allows a data rate of 327 Mb/sec. However, if the round trip time climbs to 0.8 ms or higher, a 32K window will begin to be a problem.
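The window arithmetic can be checked with a short Python sketch. It assumes K means 1024 bytes (which reproduces the 163.84 MB/sec figure) and uses the 350 Mb/sec transmit limit quoted earlier to estimate the round trip time at which a 32K window starts to be the bottleneck. The function names are illustrative only.

```python
# Sketch: window-limited TCP throughput = window size / round trip time.
# Assumption: K = 1024 bytes, which matches the 163.84 MB/sec figure.

TRANSMIT_LIMIT_BPS = 350e6  # raw transmit limit quoted in the article, bits/sec


def window_limited_bps(window_bytes: int, rtt_seconds: float) -> float:
    """Upper bound on TCP throughput, in bits/sec, imposed by the window."""
    return window_bytes * 8 / rtt_seconds


def rtt_where_window_limits(window_bytes: int, limit_bps: float) -> float:
    """Round trip time (seconds) at which the window bound drops to limit_bps."""
    return window_bytes * 8 / limit_bps


if __name__ == "__main__":
    rtt = 0.2e-3  # 0.2 ms average round trip time
    for kb in (32, 8):
        bps = window_limited_bps(kb * 1024, rtt)
        print(f"{kb}K window, 0.2 ms RTT: {bps / 1e6:.2f} Mb/sec")
    crossover = rtt_where_window_limits(32 * 1024, TRANSMIT_LIMIT_BPS)
    print(f"32K window falls below 350 Mb/sec once RTT > {crossover * 1e3:.2f} ms")
```

Under these assumptions the crossover is about 0.75 ms, which is consistent with the 0.8 ms rule of thumb.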

Assuming that the TCP window is not a bottleneck, we are back to being limited by the raw throughput of the system. Because the TCP header (20 bytes) is larger than the UDP header (8 bytes), the application data rate will be different. For the 1 byte case, the TCP rate is the same as the UDP rate because, regardless of the header, the 1 byte of data still fits in the minimum Ethernet frame. However, for the maximum size frame, TCP can only accommodate 1460 bytes of application data versus 1472 bytes for UDP. The result is a TCP application data rate that is slightly lower than the UDP rate.

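Table 1 - Maximum application data rates

| Application bytes / frame | Receive (VOS 15.0) | Receive (VOS 15.1) | Transmit |
| --- | --- | --- | --- |
| 1 (UDP or TCP) | 10.2 Mb/sec | 11.7 Mb/sec | 5.4 Mb/sec |
| 1472 (UDP) | 630 Mb/sec | 727 Mb/sec | 339 Mb/sec |
| 1460 (TCP) | 625 Mb/sec | 721 Mb/sec | 336 Mb/sec |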
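The Table 1 arithmetic can be reproduced with a short Python sketch. The header sizes below are assumed standard values (Ethernet II header plus checksum, IPv4 without options, UDP, and TCP without options) rather than figures given in the article, and the frame size excludes the Ethernet preamble and inter-frame gap; with those choices the results line up with Table 1. The names are hypothetical.

```python
# Assumed header sizes (standard values, not taken from the article).
ETH_OVERHEAD = 14 + 4                      # Ethernet header + frame check sequence
IP_HEADER = 20                             # IPv4 header without options
TRANSPORT_HEADER = {"udp": 8, "tcp": 20}   # TCP header without options
MIN_FRAME = 64                             # minimum Ethernet frame, including FCS

# Raw bit-rate limits quoted in the article, in Mb/sec.
RAW_LIMITS = {"Receive (15.0)": 650, "Receive (15.1)": 750, "Transmit": 350}


def frame_bytes(app_bytes: int, protocol: str) -> int:
    """Ethernet frame size (no preamble or gap) carrying app_bytes of data."""
    size = app_bytes + TRANSPORT_HEADER[protocol] + IP_HEADER + ETH_OVERHEAD
    return max(size, MIN_FRAME)  # short frames are padded to the minimum


def app_rate(raw_mbps: float, app_bytes: int, protocol: str) -> float:
    """Application data rate in Mb/sec: raw rate times the payload fraction."""
    return raw_mbps * app_bytes / frame_bytes(app_bytes, protocol)


if __name__ == "__main__":
    for app_bytes, proto in [(1, "udp"), (1472, "udp"), (1460, "tcp")]:
        cells = ", ".join(
            f"{name}: {app_rate(limit, app_bytes, proto):.1f}"
            for name, limit in RAW_LIMITS.items()
        )
        print(f"{app_bytes} bytes ({proto.upper()}): {cells}")
```

The printed values agree with Table 1 to within 1 Mb/sec; most table cells appear to have been truncated rather than rounded.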

So am I saying that you can expect your application to achieve the data rates in Table 1? No. Table 1 shows the best possible data rates: what you can expect in a perfect universe, with nothing else slowing the system down.

In reality, other things will also limit your application's throughput. FTP is not a very good way to measure your "network's" throughput, but it is not all that bad for measuring your "application's" throughput. Of course, a lot depends on how your application works (for example, its patterns of disk reads and writes), but FTP is illustrative.

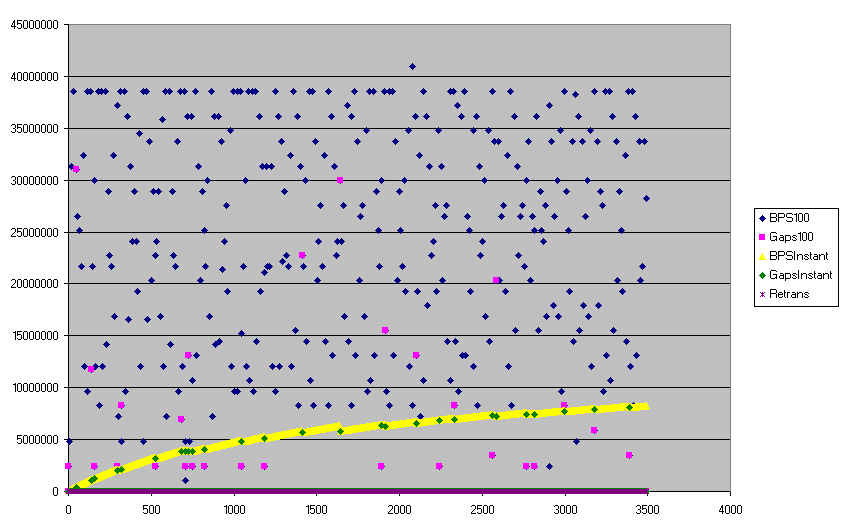

Figure 1 shows an FTP "put" from a V Series system to an ftServer over a gigabit link. A total of 3,491 frames carrying data were transmitted over 0.60651 seconds.

Each blue or purple symbol represents the throughput, in bytes per second, for one 0.000607-second interval. A purple symbol indicates a gap in the data transfer; that is, one or more of the preceding intervals had zero throughput. The yellow and green symbols show the cumulative throughput from the start of the transfer; a green symbol likewise indicates a gap in the data.
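A plot like Figure 1 can be produced by bucketing captured frames into fixed intervals. The Python sketch below assumes the capture has already been reduced to a list of (timestamp, application bytes) pairs, with non-negative timestamps measured from the start of the transfer; that input format and the function name are hypothetical and not part of any Stratus tool.

```python
from typing import List, Tuple

INTERVAL = 0.000607  # seconds per bucket, as in Figure 1


def throughput_series(frames: List[Tuple[float, int]], interval: float = INTERVAL):
    """Bucket (timestamp, bytes) pairs into fixed intervals.

    Returns three lists with one entry per interval:
      per_interval - throughput in bytes/sec for that interval alone
      cumulative   - bytes/sec from the start of the transfer to the end of it
      after_gap    - True if the interval has data and the previous one was empty
    """
    if not frames:
        return [], [], []
    # Number of intervals needed to cover the last frame.
    n = int(max(t for t, _ in frames) // interval) + 1
    per_bin = [0] * n
    for t, nbytes in frames:
        per_bin[int(t // interval)] += nbytes

    per_interval, cumulative, after_gap = [], [], []
    total = 0
    prev_empty = False
    for i, nbytes in enumerate(per_bin):
        total += nbytes
        per_interval.append(nbytes / interval)
        cumulative.append(total / ((i + 1) * interval))
        after_gap.append(nbytes > 0 and prev_empty)
        prev_empty = nbytes == 0
    return per_interval, cumulative, after_gap


if __name__ == "__main__":
    # Made-up sample: two frames in the first interval, then an empty interval.
    sample = [(0.0001, 1460), (0.0002, 1460), (0.0015, 1460)]
    per_interval, cumulative, after_gap = throughput_series(sample)
    print(after_gap)  # [False, False, True]
```

The `after_gap` flags correspond to the purple and green symbols; plotting `per_interval` and `cumulative` against the interval index gives the two series.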

Figure 1 - Transmitted Bytes Per Second

Two things are important to note. First, the FTP command line reported a throughput of 8210K bytes/sec, which agrees with the final cumulative value in the graph. Second, the cumulative throughput is much lower than the typical throughput for a 0.000607-second interval, and far lower than the maximum value. Discounting the one data point above 40,000,000 bytes per second, there is a noticeable plateau at 38,515,440.8 bytes per second, which is 301 Mb/sec.

There are 28 "gaps" in the intervals. The shortest gap is two intervals and the longest is 58 intervals. The total number of empty intervals is 418, so nothing was transferred for approximately 41.8% of the transfer time. There are multiple causes for this, including the time to read the file being transferred, process switches, and other system overhead.
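Gap statistics of this kind can be computed from per-interval byte counts with a Python sketch like the following. Here a "gap" is assumed to be a run of consecutive empty intervals with data on both sides; the article does not spell out its exact definition, so treat this as one plausible reading. The function name is hypothetical.

```python
from typing import List, Tuple


def gap_stats(per_interval_bytes: List[int]) -> Tuple[int, int, int, int, float]:
    """Summarize runs of empty intervals that occur between data intervals.

    Returns (number of gaps, shortest gap, longest gap,
             total empty intervals in gaps, fraction of all intervals in gaps).
    Empty intervals before the first data or after the last data are ignored.
    """
    gaps: List[int] = []
    run = 0
    seen_data = False
    for nbytes in per_interval_bytes:
        if nbytes == 0:
            run += 1
            continue
        if seen_data and run:
            gaps.append(run)  # an empty run with data on both sides
        seen_data = True
        run = 0
    total_empty = sum(gaps)
    fraction = total_empty / len(per_interval_bytes) if per_interval_bytes else 0.0
    return len(gaps), min(gaps, default=0), max(gaps, default=0), total_empty, fraction


if __name__ == "__main__":
    # Made-up series: data, 2 empty, data, 3 empty, data, 1 trailing empty.
    print(gap_stats([5, 0, 0, 3, 0, 0, 0, 4, 0]))
```

Dividing by the total number of intervals treats every interval as equal time, which is how a percentage of transfer time can be read off the interval counts.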

The bottom line is that your application will probably not be able to send more than 301 Mb/sec, and if it does any kind of I/O or shares the system with any other application processes, you are probably looking at about a quarter of that.